What are the ingredients for building generalist agents that learn through self-supervised exploration?

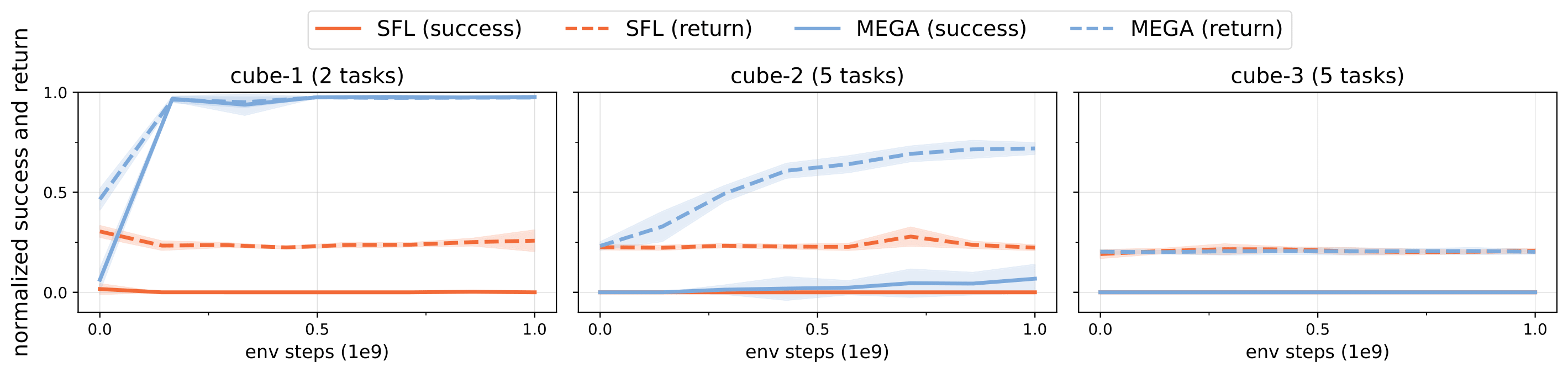

Most tasks in the self-supervised protocol remain unsolved by the algorithms we tried, though our evaluation is by no means exhaustive.

It will be exciting to see which approaches lead to agents that can solve the most complex tasks purely through self-supervised trial and error.

Why do standard RL algorithms struggle to make progress on the more complex tasks?

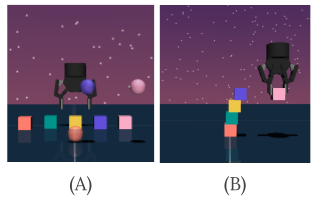

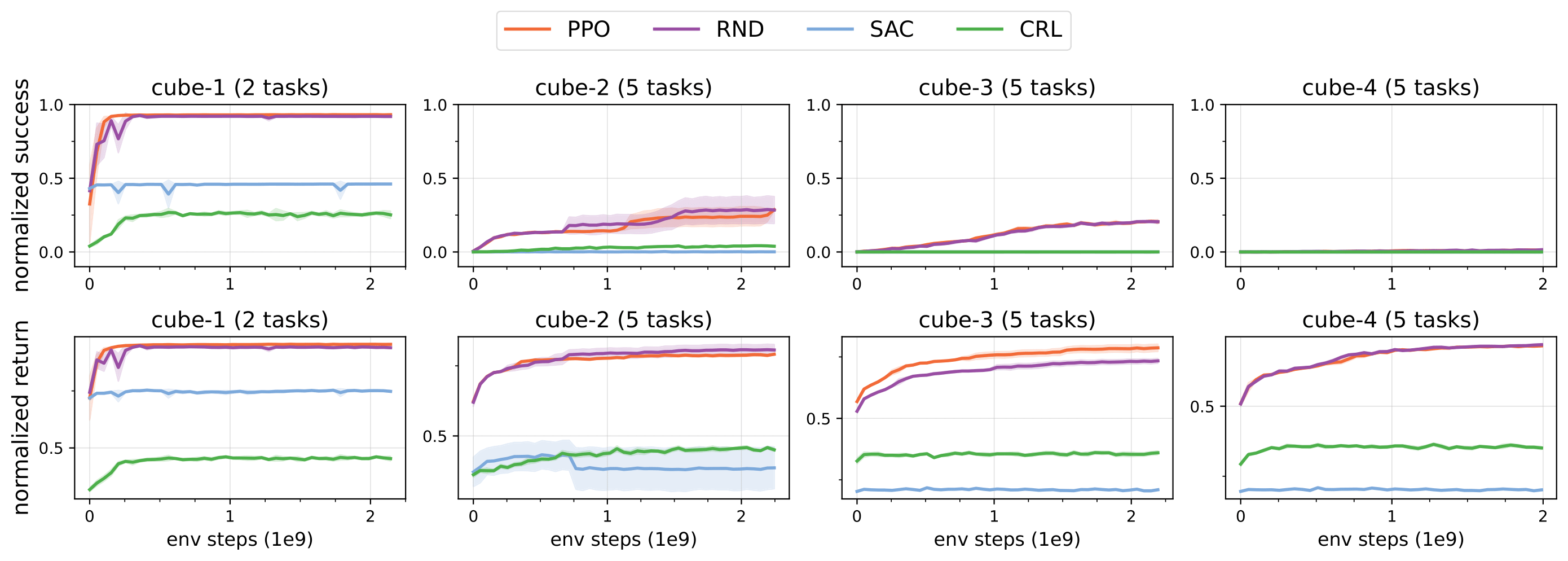

All RL algorithms we tried achieve zero success on tasks with more than 4 cubes.

What are the primary reasons? Is it exploration, the curse of horizon, model size, the number of training steps, hyperparameter choices, or something else?

How to perform RL pretraining?

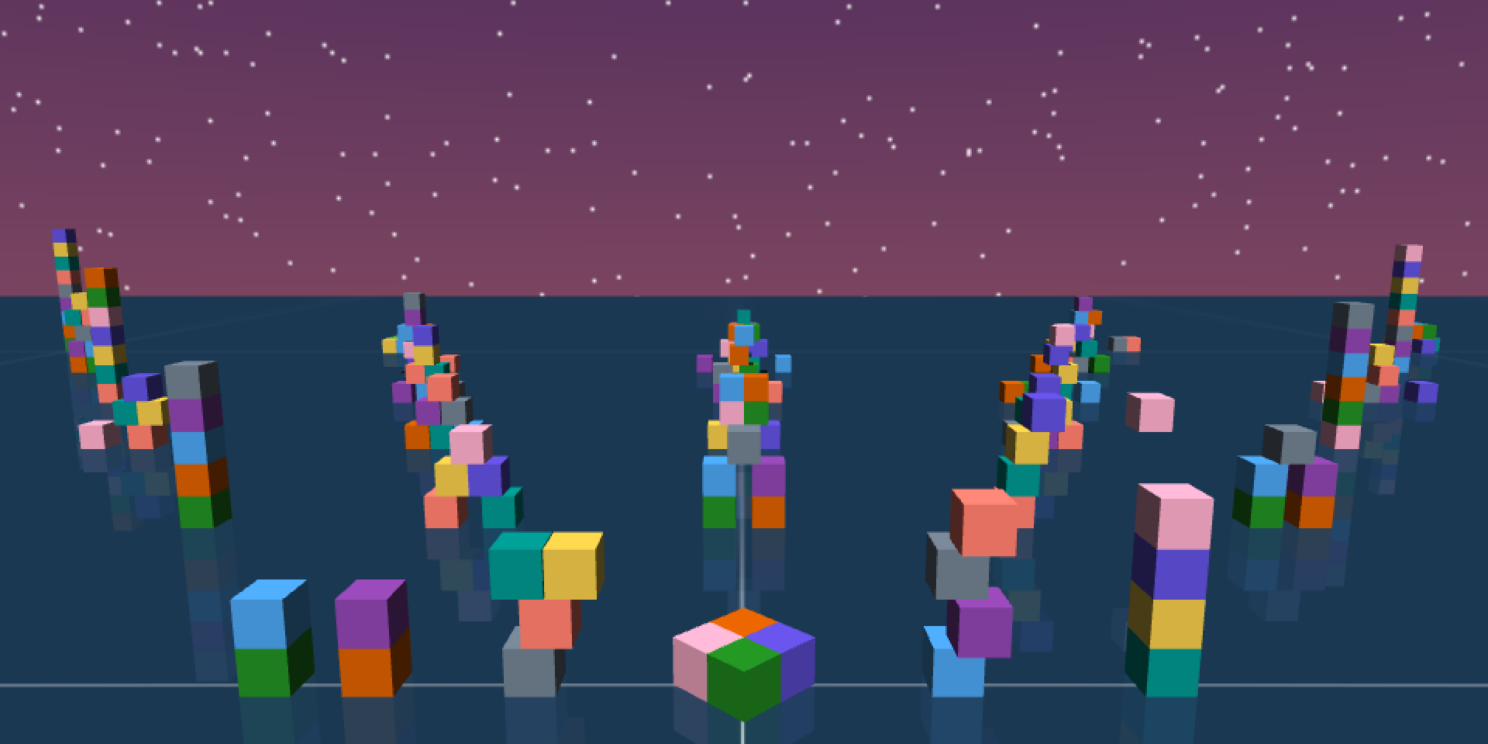

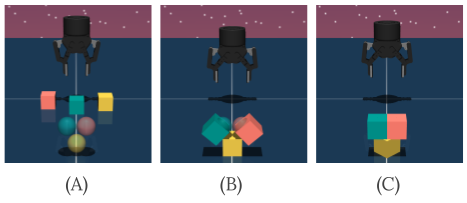

It is easy to come up with target structures that are stable and easy to build.

By easy, we mean tasks that can be solved by simple pick-and-place primitives.

This is in contrast to tasks that require unique reasoning skills (see our task suite for more examples), which are not trivial to design or to solve.

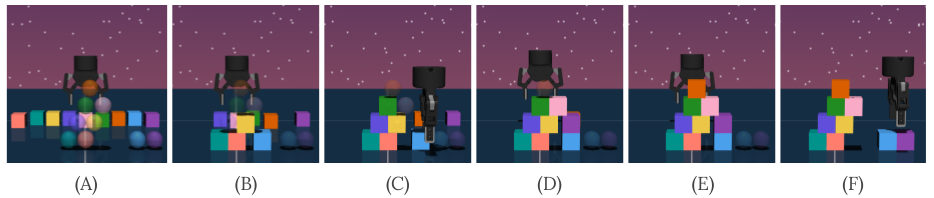

We can train multi-task RL agents using dense rewards to solve these easy-to-build tasks.
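To make "dense rewards" concrete, here is a minimal sketch of one common form: the negative mean distance between each cube and its target position. The function names, the index-based matching of cubes to targets, and the `tolerance` value are all assumptions made for illustration; they are not taken from BuilderBench.

```python
import math


def dense_reward(cube_positions, target_positions):
    """Negative mean Euclidean distance between each cube and its target.

    Assumption: cube i is matched to target i. A real task may instead be
    permutation-invariant over cubes.
    """
    if len(cube_positions) != len(target_positions):
        raise ValueError("need exactly one target per cube")
    if not cube_positions:
        raise ValueError("need at least one cube")
    total = 0.0
    for cube, target in zip(cube_positions, target_positions):
        total += math.dist(cube, target)
    return -total / len(cube_positions)


def is_success(cube_positions, target_positions, tolerance=0.02):
    """Sparse success signal: every cube lies within `tolerance` of its target.

    The tolerance value is a hypothetical placeholder.
    """
    return all(
        math.dist(cube, target) <= tolerance
        for cube, target in zip(cube_positions, target_positions)
    )
```

The dense reward gives a learning signal at every step, while the success check is what one would ultimately report. A simple reward like this does not capture stability or build order, which is one reason it only suits pick-and-place style tasks.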

Will the pretrained model provide a better initialization for solving the novel / unsolvable tasks we really care about?

This is akin to pretraining in LLMs, where low-quality data is cheap but data for novel / unsolvable tasks is not available (by definition).

Two-dimensional scaling.

The self-supervised protocol allows one to study scaling in two new dimensions.

The first is the compute allocated to sampling autotelic tasks (exploration in task space); the second is the compute allocated to solving each task via trial and error (exploration in trajectory space).

How to optimally balance the two?
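One simple way to frame the trade-off is as a fixed budget split between the number of sampled tasks and the number of steps spent on each. The sketch below enumerates such splits; measuring compute in environment steps and the name `budget_splits` are assumptions for illustration, not part of the benchmark.

```python
def budget_splits(total_env_steps, num_tasks_options):
    """Enumerate (num_tasks, steps_per_task) pairs that fit a fixed budget.

    More tasks means broader exploration in task space; more steps per task
    means deeper exploration in trajectory space.
    """
    splits = []
    for num_tasks in num_tasks_options:
        if num_tasks <= 0:
            raise ValueError("num_tasks must be positive")
        steps_per_task = total_env_steps // num_tasks
        # Skip splits that leave no steps at all for each task.
        if steps_per_task > 0:
            splits.append((num_tasks, steps_per_task))
    return splits


if __name__ == "__main__":
    for num_tasks, steps in budget_splits(1_000_000, [1, 10, 100, 1000]):
        print(f"{num_tasks:>5} tasks x {steps:>8} steps per task")
```

Sweeping over these splits at several total budgets would trace out the two scaling dimensions on a grid.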

What is the scaling behavior of current self-supervised exploration algorithms?

While our results show that self-supervised exploration algorithms are not able to solve complex tasks, we have only made a preliminary evaluation.

BuilderBench allows one to study the scaling behavior of existing self-supervised algorithms.

We argue that the key bottleneck for scaling self-supervised algorithms is the availability of suitable benchmarks.

Existing benchmarks rarely allow agents to practice skills ranging from exploration to prediction, from low-level control to high-level reasoning.

A new type of scaling law?

Typically, the x-axis in scaling laws corresponds to compute.

BuilderBench allows one to systematically put task hardness on the x-axis (for example, building a pyramid from 1, 2, ... blocks).

Can we reliably predict how RL algorithms scale with task hardness?
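As a sketch of what such a prediction could look like, one could fit a simple curve to success rate as a function of the number of blocks and extrapolate. The exponential form below is purely an assumption chosen for simplicity, not a finding, and the function names are hypothetical.

```python
import math


def fit_exponential_decay(num_blocks, success_rates):
    """Least-squares fit of log(success) = intercept - decay * n.

    Returns (intercept, decay). Points with zero success are dropped because
    log(0) is undefined, so the fit is biased when many tasks are unsolved.
    """
    points = [
        (n, math.log(s)) for n, s in zip(num_blocks, success_rates) if s > 0
    ]
    if len(points) < 2:
        raise ValueError("need at least two points with non-zero success")
    mean_n = sum(n for n, _ in points) / len(points)
    mean_y = sum(y for _, y in points) / len(points)
    var_n = sum((n - mean_n) ** 2 for n, _ in points)
    if var_n == 0:
        raise ValueError("need at least two distinct block counts")
    cov = sum((n - mean_n) * (y - mean_y) for n, y in points)
    slope = cov / var_n
    intercept = mean_y - slope * mean_n
    return intercept, -slope


def predict_success(intercept, decay, n):
    """Predicted success rate for a task with n blocks under the fitted curve."""
    return math.exp(intercept - decay * n)
```

Holding out the hardest tasks and checking the extrapolated success rate against the measured one would be a direct test of whether scaling with task hardness is predictable.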

Why is the performance of off-policy RL algorithms (like SAC and CRL) so much worse than PPO?

In our experiments, off-policy algorithms (SAC and CRL) were much worse than PPO and RND in both sample efficiency and final performance, despite making more gradient updates.